
Las Vegas 2013

DefCon 21 Preview

“Adventures in Automotive Networks and Control Units” (Track 3)

(https://www.defcon.org/html/defcon-21/dc-21-schedule.html)

- We will briefly discuss the ISO / Protocol standards that our two automobiles used to communicate on the CAN bus, also providing a Python and C API that can be used to replicate our work. The API is pretty generic so it can easily be modified to work with other makes / models.

- The first type of CAN traffic we’ll discuss is diagnostic CAN messages. Mechanics usually use these messages to diagnose problems within the automotive network, sensors, and actuators. Although they are meant for maintenance, we’ll show how some of these messages can be used to physically control the automobile under certain conditions.

- The second type of CAN data we’ll talk about is normal CAN traffic that the car regularly produces. These CAN messages are much more abundant but, being proprietary, more difficult to reverse engineer and categorize. Although reverse engineering them is time consuming, we’ll show how these messages, when played on the CAN network, have control over the most safety-critical features of the automobile.

- Finally, we’ll talk about modifying the firmware and using the proprietary re-flashing processes used for each of our vehicles. Firmware modification is most likely necessary for any sort of persistence when attempting to permanently modify an automobile’s behavior. This part of the talk will also show just how different the process is for each make/model, proving that ‘just ask the tuning community’ is not a viable option a majority of the time.
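To make the idea of a CAN message concrete, here is a minimal sketch of packing and unpacking a classic CAN frame in the 16-byte layout used by Linux SocketCAN. It is not the Python API mentioned in the talk abstract; the helper names and the sample ID and payload are placeholders chosen for illustration.

```python
import struct
from typing import Tuple

# Linux SocketCAN classic frame layout: 32-bit CAN ID, 1-byte data length,
# 3 padding bytes, then 8 data bytes (16 bytes in total).
CAN_FRAME_FORMAT = "=IB3x8s"


def pack_frame(can_id: int, data: bytes) -> bytes:
    """Serialize one classic CAN frame (hypothetical helper)."""
    if len(data) > 8:
        raise ValueError("classic CAN frames carry at most 8 data bytes")
    # Pad the payload to 8 bytes; the length field records the real size.
    return struct.pack(CAN_FRAME_FORMAT, can_id, len(data), data.ljust(8, b"\x00"))


def unpack_frame(raw: bytes) -> Tuple[int, bytes]:
    """Recover the CAN ID and the unpadded payload from a 16-byte frame."""
    can_id, length, data = struct.unpack(CAN_FRAME_FORMAT, raw)
    return can_id, data[:length]


# Placeholder values, not taken from any vehicle.
frame = pack_frame(0x123, b"\x01\x02\x03")
print(len(frame), unpack_frame(frame))
```

Categorizing captured traffic starts from exactly this kind of decoding: split each frame into an identifier and a payload, then group by identifier.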

2013 ISS Conference, Prague

- Connect to anything anywhere in the area,

- Accept invalid SSL certificates,

- Use your Viber or WhatsApp messenger to send messages (clear text protocols),

- Use non-encrypted protocols to check your email,

- Publicly display your name and the agency you represent unless asked to do so by a security representative wearing the proper badge
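On the certificate point, a short sketch of what strict validation looks like in a Python client, using only the standard `ssl` module. The function name is hypothetical; the point is that the default context already rejects invalid certificates, so accepting them takes a deliberate step.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Return a client context that rejects invalid certificates."""
    context = ssl.create_default_context()
    # The default client context already requires a valid certificate chain
    # and checks that the certificate matches the host name.
    assert context.verify_mode == ssl.CERT_REQUIRED
    assert context.check_hostname
    return context


context = strict_tls_context()
print(context.verify_mode, context.check_hostname)
```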

Why Vendor Openness Still Matters

| Version number | Hosts found | Vulnerable (SSH key) |
| --- | --- | --- |
| 1.8-5a | 1 | Yes |
| 1.8-6 | 2 | Yes |
| 2.0-0 | 110 | Yes |
| 2.0-0_a01 | 1 | Yes |
| 2.0-1 | 68 | Yes |
| 2.0-2 (patched) | 50 | No |
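The table can be summarized with a small script. This is a sketch under one stated assumption: any version below 2.0-2 is treated as vulnerable, which matches every row above but is not confirmed for versions the table does not list. The function names are illustrative.

```python
import re

# Assumption: 2.0-2 is the first patched release, as the table suggests.
PATCHED = (2, 0, 2)

# Host counts per version, copied from the table above.
HOSTS_BY_VERSION = {
    "1.8-5a": 1,
    "1.8-6": 2,
    "2.0-0": 110,
    "2.0-0_a01": 1,
    "2.0-1": 68,
    "2.0-2": 50,
}


def parse_version(text):
    """Extract (major, minor, build) and ignore suffixes such as 'a' or '_a01'."""
    match = re.match(r"(\d+)\.(\d+)-(\d+)", text.strip())
    if match is None:
        raise ValueError("unrecognized version string: %r" % text)
    return tuple(int(part) for part in match.groups())


def is_vulnerable(text):
    return parse_version(text) < PATCHED


vulnerable = sum(n for v, n in HOSTS_BY_VERSION.items() if is_vulnerable(v))
total = sum(HOSTS_BY_VERSION.values())
print(f"{vulnerable} of {total} hosts run a vulnerable version")
# prints "182 of 232 hosts run a vulnerable version"
```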

Why sanitize excessed equipment

My passion for cybersecurity centers on industrial controllers: PLCs, RTUs, and the other “field devices.” These devices are the interface between the integrator (e.g., HMI systems, historians, and databases) and the process (e.g., sensors and actuators). Researching this equipment can be costly because PLCs and RTUs cost thousands of dollars. Fortunately, I have an ally: surplus resellers that sell used equipment.

FDA Safety Communication for Medical Devices

- Wireless heart and drug monitoring stations within emergency wards that have open WiFi connections, where anyone with an iPhone searching for an Internet connection can make an unauthenticated connection and have their web browser bring up the admin portal of the station.

- Remote surgeon support and web camera interfaces used for emergency operations brought down by everyday botnet malware because someone happened to surf the web one day and hit the wrong site.

- Internet auditing and scanning services run internationally and encountering medical devices connected directly to the Internet through routable IP addresses – being used as drop-boxes for file sharing groups (oblivious to the fact that it’s a medical device under their control).

- Common WiFi and Bluetooth auditing tools (available for Android smartphones and tablets) identifying medical devices during simple “war driving” exercises and leaving the discovered devices in a hung state.

- Medical staff’s iPads without authentication or GeoIP-locking of hospital applications that “go missing” or are borrowed by kids and have applications (and games) installed from vendor markets that conflict with the use of the authorized applications.

- NFC from smartphones and payment systems that can record, play back, and interfere with the communications of implanted medical devices.

Red Team Testing: Debunking Myths and Setting Expectations

When IOActive is asked to conduct a red team test, our main goal is to accurately and realistically simulate these types of adversaries. So the first, and probably most important, element of a red team test is to define the threat model:

Think of it as an “Ocean’s Eleven” type of attack that can include:

This is full scope testing. Unlike in other types of engagement, all or almost all assets are “in scope”.

We gain a lot of insights from the actions and reactions of the organization’s security team to a red team attack. These are the types of insights that matter the most to us:

- Observing how your monitoring capabilities function during the intelligence gathering phase. The results can be eye opening and provide tremendous value when assessing your security posture.

- Measuring how your first (and second) responders in information security, HR, and physical security work together. Teamwork and coordination among teams are crucial, and the assessment allows you to build processes that actually work.

- Understanding what happens when an attacker gains access to your assets and starts to exfiltrate information or actually steals equipment. The red team experience can do wonders for your disaster recovery processes.

Tools of the Trade – Incident Response, Part 1: Log Analysis

- Log files

- Memory images

- Disk images

- OSSEC(3)

- log2timeline(6)
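As a small illustration of log analysis without any of the tools above, the sketch below counts failed SSH logins per source address. It assumes OpenSSH-style "Failed password" lines; the sample entries and addresses are invented for the example, and real logs will need the pattern adjusted.

```python
import re
from collections import Counter

# Assumption: OpenSSH auth log lines of the form
# "Failed password for [invalid user] NAME from ADDRESS port N ssh2".
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+"
)


def failed_logins_by_source(lines):
    """Count failed password attempts per source address."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts


# Invented sample data using documentation-only IP ranges.
SAMPLE_LOG = [
    "Mar  3 10:01:12 host sshd[811]: Failed password for root from 203.0.113.5 port 4242 ssh2",
    "Mar  3 10:01:15 host sshd[811]: Failed password for invalid user admin from 203.0.113.5 port 4243 ssh2",
    "Mar  3 10:02:40 host sshd[902]: Accepted password for alice from 192.0.2.10 port 5101 ssh2",
    "Mar  3 10:05:02 host sshd[950]: Failed password for bob from 198.51.100.7 port 6001 ssh2",
]

for source, count in failed_logins_by_source(SAMPLE_LOG).most_common():
    print(source, count)
```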

(1) BYO-IR: Build Your Own Incident Response: http://www.slideshare.net/wremes/isc2-byo-ir

(2) http://computer-forensics.sans.org/community/downloads

(3) http://www.ossec.net

(4) e.g. APT1 Indicators of Compromise @ http://intelreport.mandiant.com/

(5) http://blog.commandlinekungfu.com/

(6) http://log2timeline.net/

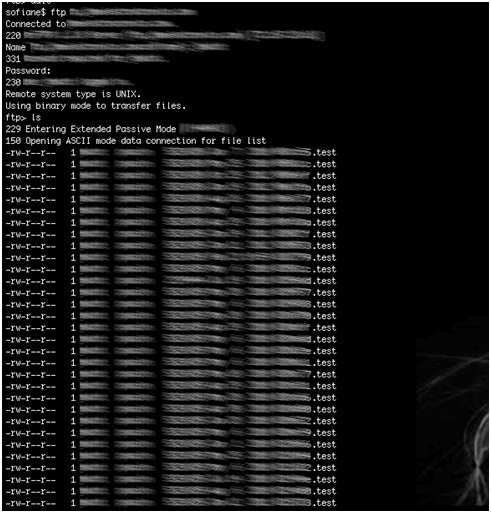

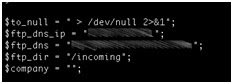

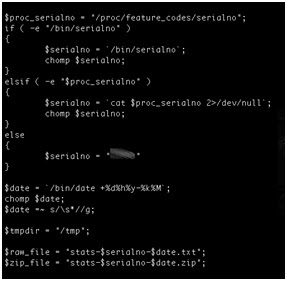

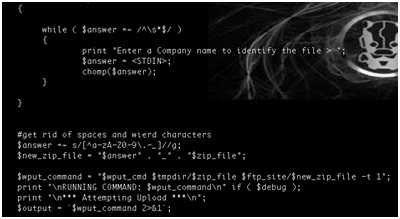

Industrial Device Firmware Can Reveal FTP Treasures!

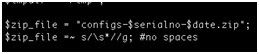

- Using the device serial number as part of a filename on a relatively accessible FTP server.

- Hardcoding the FTP server credentials within the firmware.

- Naming conventions disclose device serial numbers and company names. In addition, these serial numbers are used to generate unique admin passwords for each device.

- Credentials for the vendor’s FTP server are hard-coded within device firmware. This would allow anyone who can reverse engineer the firmware to access sensitive customer information such as device serial numbers.

- Anonymous write access to the vendor’s FTP server is enabled. This server contains sensitive customer information, which can expose device configuration data to an attacker from the public Internet. The FTP server also contains sensitive information about the vendor.

- Sensitive and critical data such as industrial device configuration files are transferred in clear text.

- A server containing sensitive customer data and running an older version of FTP that is vulnerable to a number of known exploits is accessible from the Internet.

- Clear text protocols are used to transfer sensitive information over the Internet.

- Use secure naming conventions that do not involve potentially sensitive information.

- Do not hard-code credentials into firmware (see the previous blog post by Ruben Santamarta).

- Do not transfer sensitive data using clear text protocols. Use encrypted protocols to protect data transfers.

- Do not transfer sensitive data unless it is encrypted. Use strong encryption to encrypt sensitive data before it is transferred.

- Do not expose sensitive customer information on public FTP servers that allow anonymous access.

- Enforce a strong patch policy. Servers and services must be patched and updated as needed to protect against known vulnerabilities.
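A vendor can check its own firmware images for the hard-coded credential problem before release. The following is a minimal sketch that scans a binary blob for `ftp://user:password@host` URLs. The pattern, function name, and sample bytes are all assumptions made for illustration; credentials stored in other forms would not be caught.

```python
import re

# Assumption: credentials are embedded as a plain ftp://user:password@host URL.
FTP_URL = re.compile(rb"ftp://([^\s:@/]+):([^\s@/]+)@([A-Za-z0-9.-]+)")


def find_ftp_credentials(blob: bytes):
    """Return (user, host) pairs for each embedded FTP URL.

    The password is matched but deliberately left out of the report.
    """
    return [
        (user.decode("ascii", "replace"), host.decode("ascii", "replace"))
        for user, _password, host in FTP_URL.findall(blob)
    ]


# Invented sample bytes standing in for a firmware image.
SAMPLE_FIRMWARE = (
    b"\x00\x01BOOT\x00ftp://fieldunit:s3cret@ftp.vendor.example/upload\x00cfg"
)

for user, host in find_ftp_credentials(SAMPLE_FIRMWARE):
    print(f"hard-coded FTP login for {user!r} at {host}")
```

Any hit from a check like this means the credential should be treated as public, since anyone with the firmware can run the same search.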