- Pentesting Smart Grid Web Apps from Justin Searle

- Vulnerabilities in Industrial Control Systems from ICS-CERT

- AMI Security from John Sawyer and Don Weber

- Project Basecamp: News from Camp 4 from Reid Wightman

- Denial of Service from Eireann Leverett

- Securing Critical Infrastructure from Francis Cianfrocca


TLS Renegotiation and Load Balancers

Hackito Ergo Sum: Not Just Another Conference

- All of our speakers.

- All of our sponsors (who help us and don’t ask much in exchange).

- The incredible team behind Hackito, who spend countless weekend hours on conference calls throughout the year so that others can present or party.

- Our respected Programming Committee of Death (you guys have our highest respect; thank you for allowing us to steal some of your time in return).

- Every single hacker who comes to Hackito, often from very far away, to contribute and be part of the Hackito experience. FX was right: the conference is the people!!

Enter the Dragon(Book), Part 1

See if you can find the backdoor; I’ll post the explanation and details on the flaw soon.

// eBookLib.cpp : main project file.

// Project requirements

// Add/Remove/Query eBooks

// One code file (KISS in effect)

//

//

// **** Mostly generated from Visual Studio project templates ****

//

#define WIN32_LEAN_AND_MEAN

#define _WIN32_WINNT 0x501

#include <windows.h>

#include <stdio.h>

#include <wchar.h>

#include <msclr\marshal.h>

#using <Microsoft.VisualC.dll>

#using <System.dll>

#using <System.Core.dll>

using namespace System;

using namespace System::IO;

using namespace System::Threading;

using namespace System::Threading::Tasks;

using namespace System::Reflection;

using namespace System::Diagnostics;

using namespace System::Globalization;

using namespace System::Collections::Generic;

using namespace System::Security::Permissions;

using namespace System::Runtime::InteropServices;

using namespace System::IO::MemoryMappedFiles;

using namespace System::IO;

using namespace System::Runtime::CompilerServices;

using namespace msclr;

using namespace msclr::interop;

//

// General Information about an assembly is controlled through the following

// set of attributes. Change these attribute values to modify the information

// associated with an assembly.

//

[assembly:AssemblyTitleAttribute("eBookLib")];

[assembly:AssemblyDescriptionAttribute("")];

[assembly:AssemblyConfigurationAttribute("")];

[assembly:AssemblyCompanyAttribute("Microsoft")];

[assembly:AssemblyProductAttribute("eBookLib")];

[assembly:AssemblyCopyrightAttribute("Copyright (c) Microsoft 2010")];

[assembly:AssemblyTrademarkAttribute("")];

[assembly:AssemblyCultureAttribute("")];

//

// Version information for an assembly consists of the following four values:

//

// Major Version

// Minor Version

// Build Number

// Revision

//

// You can specify all the values or you can default the Revision and Build Numbers

// by using the '*' as shown below:

[assembly:AssemblyVersionAttribute("1.0.*")];

[assembly:ComVisible(false)];

[assembly:CLSCompliantAttribute(true)];

[assembly:SecurityPermission(SecurityAction::RequestMinimum, UnmanagedCode = true)];

////////////////////////////////////////////////////////////////////////////////////////////////////////////////

// Native structures used from legacy system,

// define the disk storage for our ebook,

//

// The file specified by the constructor is read from and loaded automatically, it is also auto saved when closed.

////////////////////////////////////////////////////////////////////////////////////////////////////////////////

enum eBookFlag

{

NOFLAG = 0,

ACTIVE = 1,

PENDING_REMOVE = 2

};

typedef struct _eBookAccountingData

{

// Binary Data, may include nulls

char PurchaseOrder[ACCOUNTING_SIZE];

char RecieptData[ACCOUNTING_SIZE];

size_t PurchaseOrderLength;

size_t RecieptDataLength;

} eBookAccountingData, *PeBookAccountingData;

typedef struct _eBookPublicData

{

wchar_t ISBN[BUFSIZ];

wchar_t MISC[BUFSIZ];

wchar_t ShortName[BUFSIZ];

wchar_t Author[BUFSIZ];

wchar_t LongName[BUFSIZ];

wchar_t PathToFile[MAX_PATH];

int Rating;

int SerialNumber;

} eBookPublicData, *PeBookPublicData;

typedef struct _eBook

{

eBookFlag Flag;

eBookAccountingData Priv;

eBookPublicData Pub;

} eBook, *PeBook;

// define managed analogues for native/serialized types

namespace Client {

namespace ManagedEbookLib {

[System::FlagsAttribute]

public enum class ManagedeBookFlag : int

{

NOFLAG = 0x0,

ACTIVE = 0x1,

PENDING_REMOVE = 0x2,

};

public ref class ManagedEbookPublic

{

public:

__clrcall ManagedEbookPublic()

{

ISBN = MISC = ShortName = Author = LongName = PathToFile = String::Empty;

}

Int32 Rating;

String^ ISBN;

String^ MISC;

Int32 SerialNumber;

String^ ShortName;

String^ Author;

String^ LongName;

String^ PathToFile;

};

public ref class ManagedEbookAccounting

{

public:

__clrcall ManagedEbookAccounting()

{

PurchaseOrder = gcnew array<Byte>(0);

RecieptData = gcnew array<Byte>(0);

}

array<Byte>^ PurchaseOrder;

array<Byte>^ RecieptData;

};

public ref class ManagedEbook

{

public:

__clrcall ManagedEbook()

{

Pub = gcnew ManagedEbookPublic();

Priv = gcnew ManagedEbookAccounting();

}

ManagedeBookFlag Flag;

ManagedEbookPublic^ Pub;

ManagedEbookAccounting^ Priv;

array<Byte^>^ BookData;

};

}

}

using namespace Client::ManagedEbookLib;

// extend marshal library for native/managed inter-op

namespace msclr {

namespace interop {

template<>

inline ManagedEbookAccounting^ marshal_as<ManagedEbookAccounting^, eBookAccountingData> (const eBookAccountingData& Src)

{

ManagedEbookAccounting^ Dest = gcnew ManagedEbookAccounting;

if(Src.PurchaseOrderLength > 0 && Src.PurchaseOrderLength < sizeof(Src.PurchaseOrder))

{

Dest->PurchaseOrder = gcnew array<Byte>((int) Src.PurchaseOrderLength);

Marshal::Copy(static_cast<IntPtr>(Src.PurchaseOrder[0]), Dest->PurchaseOrder, 0, (int) Src.PurchaseOrderLength);

}

if(Src.RecieptDataLength > 0 && Src.RecieptDataLength < sizeof(Src.RecieptData))

{

Dest->RecieptData = gcnew array<Byte>((int) Src.RecieptDataLength);

Marshal::Copy(static_cast<IntPtr>(Src.RecieptData[0]), Dest->RecieptData, 0, (int) Src.RecieptDataLength);

}

return Dest;

};

template<>

inline ManagedEbookPublic^ marshal_as<ManagedEbookPublic^, eBookPublicData> (const eBookPublicData& Src) {

ManagedEbookPublic^ Dest = gcnew ManagedEbookPublic;

Dest->Rating = Src.Rating;

Dest->ISBN = gcnew String(Src.ISBN);

Dest->MISC = gcnew String(Src.MISC);

Dest->SerialNumber = Src.SerialNumber;

Dest->ShortName = gcnew String(Src.ShortName);

Dest->Author = gcnew String(Src.Author);

Dest->LongName = gcnew String(Src.LongName);

Dest->PathToFile = gcnew String(Src.PathToFile);

return Dest;

};

template<>

inline ManagedEbook^ marshal_as<ManagedEbook^, eBook> (const eBook& Src) {

ManagedEbook^ Dest = gcnew ManagedEbook;

Dest->Priv = marshal_as<ManagedEbookAccounting^>(Src.Priv);

Dest->Pub = marshal_as<ManagedEbookPublic^>(Src.Pub);

Dest->Flag = static_cast<ManagedeBookFlag>(Src.Flag);

return Dest;

};

}

}

// Primary user namespace

namespace Client

{

namespace ManagedEbooks

{

// “Store” is Client::ManagedEbooks::Store()

public ref class Store

{

private:

String^ DataStore;

List<ManagedEbook^>^ Books;

HANDLE hFile;

// serialization from disk

void __clrcall LoadDB()

{

Books = gcnew List<ManagedEbook^>();

eBook AeBook;

DWORD red = 0;

marshal_context^ x = gcnew marshal_context();

hFile = CreateFileW(x->marshal_as<const wchar_t*>(DataStore), GENERIC_READ | GENERIC_WRITE, FILE_SHARE_READ | FILE_SHARE_WRITE | FILE_SHARE_DELETE, 0, OPEN_ALWAYS, 0, 0);

if(hFile == INVALID_HANDLE_VALUE)

return;

do {

ReadFile(hFile, &AeBook, sizeof(eBook), &red, NULL);

if(red == sizeof(eBook))

Books->Add(marshal_as<ManagedEbook^>(AeBook));

} while(red == sizeof(eBook));

}

// scan hay for anything that matches needle

bool __clrcall MatchBook(ManagedEbook ^hay, ManagedEbook^ needle)

{

// check numeric values first

if(hay->Pub->Rating != 0 && hay->Pub->Rating == needle->Pub->Rating)

return true;

if(hay->Pub->SerialNumber != 0 && hay->Pub->SerialNumber == needle->Pub->SerialNumber)

return true;

// scan each string

if(!String::IsNullOrEmpty(hay->Pub->ISBN) && hay->Pub->ISBN->Contains(needle->Pub->ISBN))

return true;

if(!String::IsNullOrEmpty(hay->Pub->MISC) && hay->Pub->MISC->Contains(needle->Pub->MISC))

return true;

if(!String::IsNullOrEmpty(hay->Pub->ShortName) && hay->Pub->ShortName->Contains(needle->Pub->ShortName))

return true;

if(!String::IsNullOrEmpty(hay->Pub->Author) && hay->Pub->Author->Contains(needle->Pub->Author))

return true;

if(!String::IsNullOrEmpty(hay->Pub->LongName) && hay->Pub->LongName->Contains(needle->Pub->LongName))

return true;

if(!String::IsNullOrEmpty(hay->Pub->PathToFile) && hay->Pub->PathToFile->Contains(needle->Pub->PathToFile))

return true;

return false;

}

// finalizer (run by the garbage collector if the object was not disposed)

__clrcall !Store()

{

Close();

}

// serialization to disk happens here

void __clrcall _Close()

{

if(hFile == INVALID_HANDLE_VALUE)

return;

SetFilePointer(hFile, 0, NULL, FILE_BEGIN);

for each(ManagedEbook^ book in Books)

{

eBook save;

DWORD wrote=0;

marshal_context^ x = gcnew marshal_context();

ZeroMemory(&save, sizeof(save));

save.Pub.Rating = book->Pub->Rating;

save.Pub.SerialNumber = book->Pub->SerialNumber;

save.Flag = static_cast<eBookFlag>(book->Flag);

swprintf_s(save.Pub.ISBN, sizeof(save.Pub.ISBN), L"%s", x->marshal_as<const wchar_t*>(book->Pub->ISBN));

swprintf_s(save.Pub.MISC, sizeof(save.Pub.MISC), L"%s", x->marshal_as<const wchar_t*>(book->Pub->MISC));

swprintf_s(save.Pub.ShortName, sizeof(save.Pub.ShortName), L"%s", x->marshal_as<const wchar_t*>(book->Pub->ShortName));

swprintf_s(save.Pub.Author, sizeof(save.Pub.Author), L"%s", x->marshal_as<const wchar_t*>(book->Pub->Author));

swprintf_s(save.Pub.LongName, sizeof(save.Pub.LongName), L"%s", x->marshal_as<const wchar_t*>(book->Pub->LongName));

swprintf_s(save.Pub.PathToFile, sizeof(save.Pub.PathToFile), L"%s", x->marshal_as<const wchar_t*>(book->Pub->PathToFile));

if(book->Priv->PurchaseOrder->Length > 0)

{

pin_ptr<Byte> pin = &book->Priv->PurchaseOrder[0];

save.Priv.PurchaseOrderLength = min(sizeof(save.Priv.PurchaseOrder), book->Priv->PurchaseOrder->Length);

memcpy(save.Priv.PurchaseOrder, pin, save.Priv.PurchaseOrderLength);

pin = nullptr;

}

if(book->Priv->RecieptData->Length > 0)

{

pin_ptr<Byte> pin = &book->Priv->RecieptData[0];

save.Priv.RecieptDataLength = min(sizeof(save.Priv.RecieptData), book->Priv->RecieptData->Length);

memcpy(save.Priv.RecieptData, pin, save.Priv.RecieptDataLength);

pin = nullptr;

}

WriteFile(hFile, &save, sizeof(save), &wrote, NULL);

if(wrote != sizeof(save))

return;

}

CloseHandle(hFile);

hFile = INVALID_HANDLE_VALUE;

}

protected:

// destructor forwards to the disposable interface

virtual __clrcall ~Store()

{

this->!Store();

}

public:

// possibly hide this

void __clrcall Close()

{

_Close();

}

// constructor

__clrcall Store(String^ DataStoreDB)

{

DataStore = DataStoreDB;

LoadDB();

}

// add ebook

void __clrcall Add(ManagedEbook^ eBook)

{

Books->Add(eBook);

}

// remove ebook

void __clrcall Remove(ManagedEbook^ eBook)

{

Books->Remove(eBook);

}

// get query list

List<ManagedEbook^>^ __clrcall Query(ManagedEbook^ eBook)

{

List<ManagedEbook^>^ rv = gcnew List<ManagedEbook^>();

for each(ManagedEbook^ book in Books)

{

if(MatchBook(book, eBook))

rv->Add(book);

}

return rv;

}

};

}

}

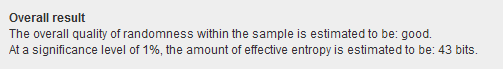

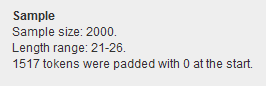

Estimating Password and Token Entropy (Randomness) in Web Applications

Entropy

1. http://en.wikipedia.org/wiki/Entropy_%28information_theory%29

https://fbcdn-sphotos-a.akamaihd.net/hphotos-ak-ash4/398297_10140657048323225_750784224_11609676_1712639207_n.jpg
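
The Entropy heading points to the information-theoretic definition linked above. As a minimal sketch, assuming you have collected a sample of tokens from the application, the following estimates Shannon entropy from observed character frequencies. The names bitsPerChar and naiveTokenBits are hypothetical, and this naive per-character model is only one possible estimator, not necessarily the method used in this post.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Empirical Shannon entropy, H = -sum p(c) * log2(p(c)), in bits per
// character, where p(c) is the frequency of character c across all samples.
double bitsPerChar(const std::vector<std::string>& samples) {
    std::map<char, std::size_t> counts;
    std::size_t total = 0;
    for (const std::string& s : samples) {
        for (char c : s) {
            ++counts[c];
            ++total;
        }
    }
    assert(total > 0 && "need at least one non-empty sample");
    double h = 0.0;
    for (const auto& kv : counts) {
        const double p = static_cast<double>(kv.second) / static_cast<double>(total);
        h -= p * std::log2(p);
    }
    return h;
}

// Naive whole-token estimate: bits per character times token length.
// Assumes every position is independent and identically distributed, so it
// overestimates tokens with structure (timestamps, counters, fixed prefixes).
double naiveTokenBits(const std::vector<std::string>& samples, std::size_t tokenLength) {
    return bitsPerChar(samples) * static_cast<double>(tokenLength);
}
```

A sample in which all 16 hex digits appear equally often yields 4 bits per character, so a 32-character hex token scores at most 128 bits under this model; the real randomness can be far lower if positions are correlated.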

I can still see your actions on Google Maps over SSL

A while ago, yours truly gave two talks on SSL traffic analysis: one at 44Con and one at RuxCon. A demonstration of the tool was also given at last year’s BlackHat Arsenal by two of my co-workers. The presented research and tool may not have been as groundbreaking as some of the other talks at those conferences, but attendees seemed to like it, so I figured it might make some good blog content.

Traffic analysis is definitely not a new field, neither in general nor when applied to SSL; a lot of great work has been done by reputable research outlets, such as Microsoft Research with researchers like George Danezis. What recent traffic analysis research has tried to show is that there are enormous amounts of useful information to be obtained by an attacker who can monitor the encrypted communication stream.

A great example of this can be found in the paper with the slightly cheesy title Side-Channel Leaks in Web Applications: a Reality Today, a Challenge Tomorrow. The paper discusses some approaches to traffic analysis on SSL-encrypted web applications and applies them to real-world systems. One of the approaches enables an attacker to build a database that contains traffic patterns of the autocomplete function in drop-down form fields (like Google’s autocomplete). Another great example is the ability, for a specific type of stock management web application, to reconstruct pie charts in a couple of days and figure out the contents of someone’s stock portfolio.

After discussing these attack types with some of our customers, I noticed that most of them seemed to have some difficulty grasping the potential impact of traffic analysis on their web applications. The research papers I referred them to are quite dry and they’re also written in dense, scientific language that does nothing to ease understanding. So, I decided to just throw some of my dedicated research time out there and come up with a proof of concept tool using a web application that everyone knows and understands: Google Maps.

Since ignorance is bliss, I decided to just jump in and try to build something without even running the numbers on whether it would make any sense to try. I started by running Firefox and Firebug in an effort to make sense of all the JavaScript voodoo going on there. I quickly figured out that Google Maps works by using a grid system in which PNG images (referred to as tiles) are laid out. Latitude and longitude coordinates are converted to x and y values depending on the selected zoom level; this gives a three-dimensional coordinate system in which each separate (x, y, z) triplet represents two PNG images. The first image is called the overlay image and contains the names of towns, rivers, highways and so forth; the second image contains the actual satellite data.
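
The latitude/longitude to (x, y, z) conversion described above can be sketched as follows. This assumes the standard Web Mercator tiling that OpenStreetMap documents as "slippy map tilenames"; whether Google Maps used exactly this formula at the time is an assumption, and latLonToTile is a hypothetical helper.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// (x, y, z) tile address in a Web Mercator "slippy map" grid.
struct TileXYZ {
    long x;
    long y;
    int z;
};

// Convert latitude/longitude in degrees to the tile containing that point at
// zoom level z. At zoom z the world is split into 2^z by 2^z square tiles.
TileXYZ latLonToTile(double latDeg, double lonDeg, int z) {
    assert(z >= 0 && z <= 30);
    const double pi = std::acos(-1.0);
    const double n = std::ldexp(1.0, z);  // 2^z tiles along each axis
    const double latRad = latDeg * pi / 180.0;
    const double xf = (lonDeg + 180.0) / 360.0 * n;
    const double yf = (1.0 - std::asinh(std::tan(latRad)) / pi) / 2.0 * n;
    // Clamp so that lon = 180 or |lat| beyond ~85.05 degrees stays on the grid.
    const long maxIndex = (1L << z) - 1;
    const long x = std::min(std::max(static_cast<long>(std::floor(xf)), 0L), maxIndex);
    const long y = std::min(std::max(static_cast<long>(std::floor(yf)), 0L), maxIndex);
    return TileXYZ{x, y, z};
}
```

Each triplet produced this way addresses one grid position; in the scheme described above, that position maps to both an overlay image and a satellite image.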

Once I had this figured out, the approach became simple: scrape a lot of satellite tiles and build a database of the image sizes using the tool GMapCatcher. I then built a tool that uses libpcap to approximate the image sizes by monitoring the SSL-encrypted traffic on the wire. The tool tries to match the image sizes to the recorded (x, y, z) triplets in the database and then tries to cluster the results into a specific region. This is notoriously difficult because a large enough database produces many false positives. Add to this the fact that it is next to impossible to scrape the entire Google Maps database since, first, they will ban you for generating so much traffic and, second, you would have to store many petabytes of image data.
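
The matching and clustering steps above might look roughly like this. Everything here is a hypothetical sketch: the SizeDb layout, the fixed byte tolerance meant to absorb TLS record overhead, and the coarse grid-bucket voting used as a stand-in for clustering are assumptions, not the actual GMaps-Trafficker logic.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <tuple>
#include <vector>

struct Tile {
    long x;
    long y;
    int z;
};

// Hypothetical database: recorded PNG size in bytes -> tiles of that size.
using SizeDb = std::multimap<std::size_t, Tile>;
using Region = std::tuple<long, long, int>;

// Tiles whose recorded size is within +/- tolerance of a size estimated from
// encrypted traffic. The tolerance absorbs record headers, padding and MACs.
std::vector<Tile> candidates(const SizeDb& db, std::size_t observed, std::size_t tolerance) {
    const std::size_t lo = observed > tolerance ? observed - tolerance : 0;
    std::vector<Tile> out;
    for (auto it = db.lower_bound(lo); it != db.end() && it->first <= observed + tolerance; ++it)
        out.push_back(it->second);
    return out;
}

// Crude clustering: bucket each candidate into a coarse region by dropping the
// low `shift` bits of x and y, then return the region with the most votes.
// Returns (-1, -1, -1) if no observed size matched anything.
Region likelyRegion(const SizeDb& db, const std::vector<std::size_t>& observed,
                    std::size_t tolerance, int shift) {
    assert(shift >= 0 && shift < 31);
    std::map<Region, int> votes;
    for (std::size_t size : observed)
        for (const Tile& t : candidates(db, size, tolerance))
            ++votes[Region(t.x >> shift, t.y >> shift, t.z)];
    if (votes.empty()) return Region(-1, -1, -1);
    auto best = votes.begin();
    for (auto it = votes.begin(); it != votes.end(); ++it)
        if (it->second > best->second) best = it;
    return best->first;
}
```

Voting lets neighboring tiles reinforce each other while an isolated false positive (a tile elsewhere that happens to share a size) collects only a single vote; the larger the database, the more such collisions appear.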

With a little bit of cheating (I used a large browser window so I would have more data to work with), I managed to make the movie Proof of Concept – SSL Traffic Analysis of Google Maps.

As shown in the movie, the tool has a database that contains city profiles including Paris, Berlin, Amsterdam, Brussels, and Geneva. The tool runs on the right; on the left is the browser accessing Google Maps over SSL. In the first attempt, I load the city of Paris and zoom in a couple of times. In the second attempt, I navigate to Berlin and zoom in a few times. On both occasions the tool correctly guesses the locations that the browser is accessing.

Please note that it is a shoddy proof of concept, but it shows the concept of SSL traffic analysis pretty well. It also might be easier to understand for less technically inclined people, as in “An attacker can still figure out what you’re looking at on Google Maps” (with the addendum that it’s never going to be 100% perfect and that my shoddy proof of concept has lots of room for improvement).

For more specific details on this please refer to the IOActive white paper Traffic Analysis on Google Maps with GMaps-Trafficker or send me a tweet at @santaragolabs.

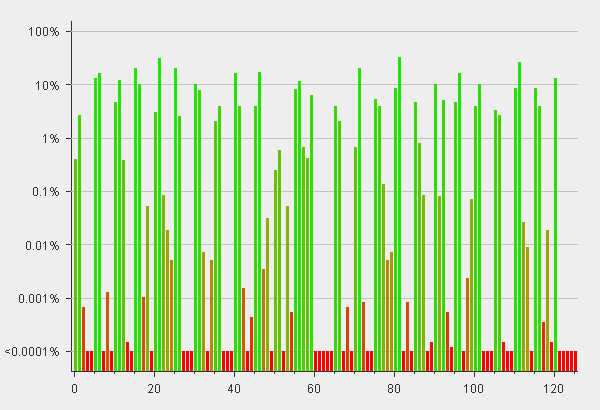

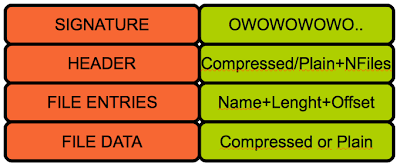

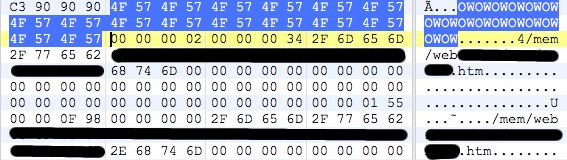

Solving a Little Mystery

Firmware analysis is a fascinating, although not very widespread, area within the vast world of reverse engineering. Sometimes you reach an impasse until you notice a minor (or major) detail you initially overlooked. That’s why sharing methods and findings is a great way to advance in this field.

There are a few things here worth mentioning:

- The header is not necessarily 12 bytes long; it can be 8, so the third field seems to be optional.

- The first 4 bytes look like a flag field that may indicate, among other things, whether the file data is compressed (1 = compressed, 2 = plain).

- The signature can vary between firmwares, since it is defined by the constant ‘HTTP_UNIQUE_SIGNATURE’. In fact, we may find this signature twice inside a firmware image: once because of the .h file where it is defined (close to other strings such as the web server banner), and once as part of the MemFS itself.
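
Locating every occurrence of the signature, and reading the flag field, can be sketched as below. findSignature, decodeFlags and EntryStorage are hypothetical names; the little-endian byte order is an assumption, and only the exact values 1 and 2 are interpreted because the flag field may carry other information too.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Return every offset at which `signature` occurs in `image`. More than one
// hit is expected: the string from the .h file and the one inside the MemFS.
std::vector<std::size_t> findSignature(const std::vector<std::uint8_t>& image,
                                       const std::string& signature) {
    std::vector<std::size_t> hits;
    if (signature.empty() || signature.size() > image.size()) return hits;
    for (std::size_t i = 0; i + signature.size() <= image.size(); ++i) {
        bool match = true;
        for (std::size_t j = 0; j < signature.size() && match; ++j)
            match = image[i + j] == static_cast<std::uint8_t>(signature[j]);
        if (match) hits.push_back(i);
    }
    return hits;
}

enum class EntryStorage { Compressed, Plain, Unknown };

// Interpret the first 4 header bytes as a flag field (assumed little-endian).
EntryStorage decodeFlags(const std::uint8_t* header) {
    const std::uint32_t flags = static_cast<std::uint32_t>(header[0]) |
                                (static_cast<std::uint32_t>(header[1]) << 8) |
                                (static_cast<std::uint32_t>(header[2]) << 16) |
                                (static_cast<std::uint32_t>(header[3]) << 24);
    if (flags == 1) return EntryStorage::Compressed;
    if (flags == 2) return EntryStorage::Plain;
    return EntryStorage::Unknown;
}
```

In practice the hit inside the MemFS is the interesting one; the other hit sits among ordinary strings such as the web server banner.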

A free Windows Vulnerability for the NSA

Some months ago at Black Hat USA 2011, I presented this interesting issue in the workshop “Easy and Quick Vulnerability Hunting in Windows,” and now I’m sharing a more detailed explanation with everyone in this blog post.

In C:\Windows\Installer there are many installer files (from already installed applications) with what appear to be random names. Some of these installer files (like Microsoft Office Publisher MUI (English) 2007) automatically elevate privileges and try to install when any Windows user runs them. Since the applications are already installed, there’s no problem, at least in theory.

C:\Users\username\AppData\Local\Temp, which is the temporary folder for the current user. The created file is named Hx????.tmp (where ???? seem to be random hex digits), and it appears to be a COM DLL from the Microsoft Help Data Services Module, whose original name is HXDS.dll. This DLL is later loaded by an msiexec.exe process running under the System account, which the Windows Installer service launches during the installation process.

msiexec.exe process generates an MD5 hash of the DLL file and compares it with a known-good MD5 hash value that is read from a file located in C:\Windows\Installer, which is only readable and writable by the System and Administrators accounts.

C:\windows\temp folder, possibly allowing the same attack.

Common Coding Mistakes – Wide Character Arrays

char string version, respectively. If you’ve done any development on Windows systems, you’ll definitely be no stranger to wide character strings.

int) from the packet and performs a bounds check. If the check passes, a string of the specified length (assumed to be a UTF-16 string) is copied from the packet buffer to a fresh wide char array on the heap.

[ … ]

if(packet->dataLen > 34 || packet->dataLen < sizeof(wchar_t)) bailout_and_exit();

size_t bufLen = packet->dataLen / sizeof(wchar_t);

wchar_t *appData = new wchar_t[bufLen];

memcpy(appData, packet->payload, packet->dataLen);

[ … ]

char array. But consider what would happen if packet->dataLen was an odd number. For example, if packet->dataLen = 11, we end up with size_t bufLen = 11 / 2 = 5 since the remainder of the division will be discarded.

memcpy() copies 11 bytes. Since five wide chars on Windows are 10 bytes (and 11 bytes are copied), we have an off-by-one overflow. To avoid this, the modulo operator should be used to check that packet->dataLen is even to begin with; that is:

if(packet->dataLen % 2) bailout_and_exit();
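
Putting the length checks together, a safer version of the copy might look like this. It is a sketch under assumptions: the Packet layout and copyUtf16Payload are hypothetical, and it uses char16_t rather than wchar_t because char16_t is 2 bytes on every platform, which is what a UTF-16 wire format needs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical packet layout mirroring the snippet above.
struct Packet {
    std::uint32_t dataLen;        // payload length in bytes
    const std::uint8_t* payload;  // UTF-16 code units, not NUL-terminated
};

// Copy a UTF-16 payload into a NUL-terminated buffer.
// Returns an empty vector when the length is out of range or odd.
std::vector<char16_t> copyUtf16Payload(const Packet& p, std::size_t maxBytes) {
    assert(p.payload != nullptr);
    if (p.dataLen < sizeof(char16_t) || p.dataLen > maxBytes) return {};
    if (p.dataLen % sizeof(char16_t) != 0) return {};      // reject odd lengths
    const std::size_t units = p.dataLen / sizeof(char16_t);
    std::vector<char16_t> buf(units + 1, u'\0');            // +1 element for the terminator
    std::memcpy(buf.data(), p.payload, units * sizeof(char16_t));
    return buf;
}
```

With an odd dataLen such as 11, the function now bails out instead of copying 11 bytes into a 10-byte buffer.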

len + 1 is used instead of the len + 2 that is required to account for the extra NULL byte(s) needed to terminate wide char arrays, for example:

int alloc_len = len + 1;

wchar_t *buf = (wchar_t *)malloc(alloc_len);

memset(buf, 0x00, len);

wcsncpy(buf, srcBuf, len);

If srcBuf had len wide chars in it, all of these would be copied into buf, but wcsncpy() would not NULL terminate buf. With normal character arrays, the added byte (which will be a NULL because of the memset) would be the NULL terminator and everything would be fine. But since wide char strings need either a two- or four-byte NULL terminator (Windows and UNIX, respectively), we now have a non-terminated string that could cause problems later on.
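
A corrected allocation reserves one extra element, not one extra byte, and terminates explicitly. This is a minimal sketch; copyWide is a hypothetical helper, and computing sizes with sizeof(wchar_t) keeps it correct for both 2-byte and 4-byte wchar_t.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <cwchar>

// Copy at most len wide chars from srcBuf into a fresh heap buffer that is
// always NUL-terminated. The caller releases the result with std::free().
// Returns nullptr if the size would overflow or allocation fails.
wchar_t* copyWide(const wchar_t* srcBuf, std::size_t len) {
    assert(srcBuf != nullptr);
    if (len > SIZE_MAX / sizeof(wchar_t) - 1) return nullptr;   // size overflow
    const std::size_t allocBytes = (len + 1) * sizeof(wchar_t); // +1 element, not +1 byte
    wchar_t* buf = static_cast<wchar_t*>(std::malloc(allocBytes));
    if (buf == nullptr) return nullptr;
    std::wcsncpy(buf, srcBuf, len);  // does not terminate if srcBuf has >= len chars
    buf[len] = L'\0';                // so terminate explicitly
    return buf;
}
```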

int destLen = (stringLen * sizeof(wchar_t)) + sizeof(wchar_t);

wchar_t *destBuf = (wchar_t *)malloc(destLen);

MultiByteToWideChar(CP_UTF8, 0, srcBuf, stringLen, destBuf, destLen);

[ do something ]

The MultiByteToWideChar API is documented at <http://msdn.microsoft.com/en-us/library/windows/desktop/dd319072%28v=vs.85%29.aspx>.

The last parameter of MultiByteToWideChar (cchWideChar) is the size of the destination buffer in wide characters, not in bytes as passed in the call above. Our destination length is out by a factor of two here (or four on UNIX-like systems, generally), and ultimately we can end up overrunning the buffer. These sorts of mistakes result in overflows, and they’re surprisingly common.
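
In the Windows call above, the fix is to pass an element count (the buffer size in bytes divided by sizeof(wchar_t)) as the last argument. Because MultiByteToWideChar is Windows-only, the sketch below illustrates the same rule with the standard std::mbstowcs, whose limit is also counted in wide characters; toWide is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdlib>
#include <cwchar>
#include <vector>

// Convert a multibyte string into dest, whose capacity is dest.size() wide
// characters. The count passed to std::mbstowcs is in elements, never bytes.
// Returns false on invalid input or if dest is too small.
bool toWide(const char* src, std::vector<wchar_t>& dest) {
    assert(src != nullptr);
    // A null destination asks for the required length in wide chars,
    // excluding the terminator (POSIX behavior).
    const std::size_t needed = std::mbstowcs(nullptr, src, 0);
    if (needed == static_cast<std::size_t>(-1)) return false;  // invalid sequence
    if (needed >= dest.size()) return false;                   // no room for terminator
    std::mbstowcs(dest.data(), src, dest.size());              // element count
    return true;
}
```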

char string functions, like wcsncpy(), for example:

unsigned int destLen = (stringLen * sizeof(wchar_t)) + sizeof(wchar_t);

wchar_t destBuf[destLen];

memset(destBuf, 0x00, destLen);

wcsncpy(destBuf, srcBuf, sizeof(destBuf));

While using sizeof(destBuf) for the maximum destination size would be fine if we were dealing with normal characters, this doesn’t work for wide character buffers. Instead, sizeof(destBuf) returns the number of bytes in destBuf, which means the wcsncpy() call above can end up copying twice as many bytes to destBuf as intended: again, an overflow.
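
The fix is to hand wcsncpy() an element count rather than a byte count. A minimal sketch, assuming a fixed-size array (a C++ template cannot deduce the size of the variable-length array used above); copyInto is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <cwchar>

// Copy into a fixed-size wide array using an element count. For a wchar_t
// array, sizeof(destBuf) is sizeof(wchar_t) times the element count, so it
// must be divided by sizeof(destBuf[0]) before being passed to wcsncpy().
template <std::size_t N>
void copyInto(wchar_t (&destBuf)[N], const wchar_t* srcBuf) {
    static_assert(N > 0, "destination must hold at least a terminator");
    assert(srcBuf != nullptr);
    const std::size_t count = sizeof(destBuf) / sizeof(destBuf[0]);  // equals N
    std::wcsncpy(destBuf, srcBuf, count - 1);
    destBuf[count - 1] = L'\0';  // wcsncpy does not terminate on truncation
}
```

On MSVC, the _countof macro computes the same element count.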

char equivalent string manipulation functions are also prone to misuse in the same ways as their normal char counterparts; when auditing, look for all the wide char equivalents, such as swprintf, wcscpy, wcsncpy, etc. There are also a few wide char-specific APIs that are easily misused; take, for example, wcstombs(), which converts a wide char string to a multi-byte string. The prototype looks like this:

size_t wcstombs(char *restrict s, const wchar_t *restrict pwcs, size_t n);

n bytes have been written to s or when a NULL terminator is encountered in pwcs (the source buffer). If an error occurs (that is, a wide char in pwcs can’t be converted), the conversion stops and the function returns (size_t)-1; otherwise, the number of bytes written, excluding any terminating NULL, is returned. MSDN considers wcstombs() to be deprecated, but there are still a few common ways to mess up when using it, and they all revolve around not checking return values.

int i;

i = wcstombs( … ); // wcstombs() can return -1

buf[i] = L'\0';

n, the destination buffer won’t be NULL terminated, so any string operations later carried out on or using the destination buffer could run past the end of the buffer. Two possible consequences are a page fault if an operation runs off the end of a page, or memory corruption bugs, depending on how the destination buffer is used later. Developers should avoid wcstombs() and use wcstombs_s() or another, safer alternative. Bottom line: always read the docs before using a new function, since APIs don’t always do what you’d expect (or want) them to do.
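
Where wcstombs_s() is not available (it is an optional part of the C standard and absent from glibc), checking every outcome of wcstombs() can be sketched as follows. narrowCopy is a hypothetical helper; the truncation check relies on the POSIX behavior of returning the required length when the destination is null.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>
#include <cwchar>

// Convert a wide string into a caller-supplied byte buffer. Returns false on
// conversion failure or truncation, and never leaves dest unterminated.
bool narrowCopy(char* dest, std::size_t destSize, const wchar_t* src) {
    assert(dest != nullptr && src != nullptr);
    if (destSize == 0) return false;
    // Leave one byte spare so there is always room for a terminator.
    const std::size_t written = std::wcstombs(dest, src, destSize - 1);
    if (written == static_cast<std::size_t>(-1)) {  // unconvertible wide char
        dest[0] = '\0';
        return false;
    }
    dest[written] = '\0';  // terminate even if the conversion stopped early
    // A null destination asks for the full converted length (POSIX behavior).
    return std::wcstombs(nullptr, src, 0) == written;  // false if truncated
}
```

Note that the return value is kept in a size_t and compared against (size_t)-1 before it is ever used as an index.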

char and normal char functions. A good example would be incorrectly using strlen() on a wide character string instead of wcslen(); since wchar strings are chock full of NULL bytes, strlen() isn’t going to return the length you were after. It’s easy to see how this can end up causing security problems if a memory allocation is based on a strlen() that was incorrectly performed on a wide char array.
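
The difference is easy to demonstrate. In this sketch (wideCopyBytes and mistakenLength are hypothetical names), strlen() walks the wide string byte by byte and stops at the first zero byte, which for ASCII text usually sits inside the very first wide character.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <cwchar>

// Bytes needed to hold a copy of a wide string, including its terminator.
std::size_t wideCopyBytes(const wchar_t* s) {
    assert(s != nullptr);
    return (std::wcslen(s) + 1) * sizeof(wchar_t);
}

// What a mistaken strlen()-based calculation would see: it stops at the
// first zero byte of the wide string's object representation.
std::size_t mistakenLength(const wchar_t* s) {
    assert(s != nullptr);
    return std::strlen(reinterpret_cast<const char*>(s));
}
```

For L"abc", wideCopyBytes() reports 4 * sizeof(wchar_t) bytes, while mistakenLength() reports fewer than 3, so an allocation sized from it would be far too small.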

wchars. In the examples above, I have assumed sizeof(wchar_t) = 2; however, as I’ve said a few times, this is NOT necessarily the case at all, since many UNIX-like systems have sizeof(wchar_t) = 4.

wchar_t *destBuf = (wchar_t *)malloc(32 * 2 + 2);

wcsncpy(destBuf, srcBuf, 32);

destBuf for 32 wide chars plus a NULL terminator (66 bytes). But as soon as you run this on a Linux box, where wide chars are four bytes, wcsncpy() will write 4 * 32 = 128 bytes into that 66-byte buffer, resulting in a pretty obvious overflow.

sizeof(wchar_t) to find out.

char functions, and ensure the math is right when allocating and copying memory. Make sure you check your return values properly and, most obviously, read the docs to make absolutely sure you’re not making any mistakes or missing something important.

Security Mechanism of PIC16C558,620,621,622

Last month we talked about the structure of an AND gate laid out in silicon CMOS. Now we show how this AND gate has been used in Microchip PICs such as the PIC16C558, PIC16C620, PIC16C621, PIC16C622, and a variety of others.

If you wish to determine whether this article relates to a particular PIC you have, you can take a windowed OTP part (/JW) and set the lock bits. If it still reports being locked after 10 minutes under UV, this article applies to your PIC.

IF THE PART REMAINS LOCKED, IT CANNOT BE UNLOCKED SO TEST AT YOUR OWN RISK.

The picture above is the die of the PIC16C558 magnified 100x. The PIC16C620-622 look much the same. If there are letters after the final number (e.g. PIC16C622A vs. PIC16C622), the die has most likely been “shrunk”.

Our area of concern is highlighted above along with a zoom of the area.

When magnified 500x, things become clear. Notice that the top metal (M2) is covering our DUAL 2-input AND gate in the red box above. We previously showed you one half of this area; now you can see that there is a pair of 2-input AND gates. This was done to offer two security lock bits for memory regions (read the datasheet section on special features of the CPU).

Stripping off the top metal (M2) now clearly shows us the bussing from two different areas that keep the part secure. Microchip went the extra step of covering the floating gates of the main, easily discoverable fuses with metal to prevent UV from erasing a locked state. The outputs of those two fuses also feed into logic on the left side of the picture that reports the part as locked during a device readback of the configuration fuses.

This type of fuse is protected by multiple sets of fuses, of which only some are UV-erasable.

The AND gates are ensuring all fuses are erased to a ‘1’ to “unlock” the device.

What does this mean to an attacker? It means: go after the final AND gate if you want to forcefully unlock the CPU. The outputs of the final AND gate stage run underneath VDD!! (This is the big mistake Microchip made.) Two shots with a laser cutter and we can short the output stages “Y” of the AND gate to a logic ‘1’, allowing readback of the memories (the part will still say it is locked).

Stripping off the lower metal layer (M1) reveals the polysilicon layer.

What have we learned from all this?

- A lot of time and effort went into the design of this series of security mechanisms.

- These are the most secure Microchip PICs of ALL currently available. The latest ~350-400nm 3-4 metal layer PICs are less secure than the

- Anything made by human can be torn down by human!

:->